Mesonet Essentials is a resource created in collaboration with members of the American Association of State Climatologists (AASC) and the national mesonet community. To learn more about the AASC vision, mission, goals, and membership opportunities, visit their website at www.stateclimate.org.

In this Section

- Operation and Maintenance of Your Mesonet

- Tasks to Maintain Your Mesonet and Ensure Continuous Data Quality

- Creating a Maintenance Schedule and Procedure

- Considerations that Affect Your Maintenance Schedule

- Calibrating Your Sensors

- Calibrating Your Data Loggers

- Replacement Equipment

- Managing Your Equipment Inventory

- Additional Resources for Your Maintenance Tasks

- Procedures for Handling Non-Routine Maintenance Issues

Operation and Maintenance of Your Mesonet

Designing a mesonet and installing its many automated weather stations make up a significant portion of the preparatory work. However, you will need to work through several additional tasks and considerations, not only to make your mesonet fully operational but also to keep it that way.

The only effective maintenance program for an AWSN is one based on preventive maintenance, that is, a maintenance program that rotates sensors, data loggers, and communications equipment before their performance degrades.

Christopher A. Fiebrich, David L. Grimsley, Renee A. McPherson, Kris A. Kesler, and Gavin R. Essenberg, “The Value of Routine Site Visits in Managing and Maintaining Quality Data from the Oklahoma Mesonet,” Journal of Atmospheric and Oceanic Technology 23, no. 3 (2006): 406-416. http://journals.ametsoc.org/doi/pdf/10.1175/JTECH1852.1.

Paul W. Brown and Kenneth G. Hubbard, “Lessons from the North American Experience with Automated Weather Stations,” Automated Weather Stations for Applications in Agriculture and Water Resources Management: Current Use and Future Perspectives (proceedings of an international workshop, Lincoln, Nebraska, March 6-10, 2000): 21-28. http://www.wamis.org/agm/pubs/agm3/WMO-TD1074.pdf.

Tasks to Maintain Your Mesonet and Ensure Continuous Data Quality

To maintain your mesonet and ensure you are continuously acquiring quality data, you will need to perform two types of tasks:

- Routine, regularly scheduled maintenance

- Unexpected, non-routine (including emergency) servicing

Routine maintenance can be scheduled at known intervals to address recurring issues such as vegetation growth, dust accumulation on sensing instruments, and general sensor servicing.

General maintenance consists of evaluating the overall integrity of the site (e.g., structures, guy wires, adverse weathering) and trimming vegetation. Stanhill (1992) demonstrated the importance of frequently cleaning the outer hemisphere of the pyranometer. Extended periods between cleaning can result in as much as an 8.5% reduction in net solar radiation due to the deposition of dust and particles on the dome. Because of concerns about site security and integrity, only Mesonet technicians are allowed within the site enclosure. More frequent maintenance of general site characteristics, such as trimming grass and cleaning the pyranometer dome, raised concerns that inexperienced local personnel may disrupt soil temperature plots, accidentally loosen or cut wires, or dent the pyranometer dome during cleaning. In addition, with more than half the sites located on privately owned land, weekly visits to sites could strain relations with the site owners.

The Arizona Meteorological Network describes emergency maintenance as such:

Emergency maintenance falls under the general category of technical maintenance and is required when a sensor, data acquisition system, or power supply falters or fails. Good preventive maintenance minimizes but never eliminates emergency maintenance. It is important to designate a person or persons that are responsible for emergency maintenance. Personnel with emergency maintenance responsibilities must have the flexibility to address emergency situations on short notice and thus should not be tied to other essential network chores such as programming or database management. Often, the individual assigned to technical maintenance also performs emergency maintenance.

The New York State Mesonet shared their experience with the need for unexpected, non-routine servicing:

The New York State Mesonet, in just its first few years, has had cows break into a site, numerous problems with mice making nests within enclosures and conduit, and bees making hives under the enclosure rain guard. We've also had two lightning strikes, and severe winds break apart an Alter shield.

Christopher A. Fiebrich, David L. Grimsley, Renee A. McPherson, Kris A. Kesler, and Gavin R. Essenberg, “The Value of Routine Site Visits in Managing and Maintaining Quality Data from the Oklahoma Mesonet,” Journal of Atmospheric and Oceanic Technology 23, no. 3 (2006): 406-416. http://journals.ametsoc.org/doi/pdf/10.1175/JTECH1852.1.

G. Stanhill, “Accuracy of global radiation measurements at unattended, automatic weather stations,” Agricultural and Forest Meteorology 61, no. 1-2 (1992): 151-156. https://doi.org/10.1016/0168-1923(92)90030-8.

Mark A. Shafer, Christopher A. Fiebrich, Derek S. Arndt, Sherman E. Fredrickson, and Timothy W. Hughes, “Quality Assurance Procedures in the Oklahoma Mesonetwork,” Journal of Atmospheric and Oceanic Technology 17, no. 4 (2000): 474-494. http://cig.mesonet.org/staff/shafer/Mesonet_QA_final_fixed.pdf.

Paul W. Brown and Kenneth G. Hubbard, “Lessons from the North American Experience with Automated Weather Stations,” Automated Weather Stations for Applications in Agriculture and Water Resources Management: Current Use and Future Perspectives (proceedings of an international workshop, Lincoln, Nebraska, March 6-10, 2000): 21-28. http://www.wamis.org/agm/pubs/agm3/WMO-TD1074.pdf.

Creating a Maintenance Schedule and Procedure

Obviously, you can’t know beforehand when an equipment or communication issue will occur and significantly impact your mesonet’s data integrity and collection. While you can’t prevent unexpected disruptions or emergencies, you can create a routine maintenance schedule to proactively minimize the likelihood of disruptions.

An adequate maintenance program is essential to the collection of accurate data.

You may create a schedule that includes—for example—daily, weekly, biweekly, monthly, bimonthly, quarterly, and annual tasks. These routine tasks may include calibrating sensors, replacing batteries, and inspecting your mesonet’s automated weather stations to ensure the correct functioning of instruments.

The World Meteorological Organization (WMO) offers this perspective on routine maintenance:

Observing sites and instruments should be maintained regularly so that the quality of observations does not deteriorate significantly between station inspections. Routine (preventive) maintenance schedules include regular "housekeeping" at observing sites (for example, grass cutting and cleaning of exposed instrument surfaces) and manufacturers’ recommended checks on automatic instruments. Routine quality control checks carried out at the station or at a central point should be designed to detect equipment faults at the earliest possible stage. Depending on the nature of the fault and the type of station, corrective maintenance (instrument replacement or repair) should be conducted according to agreed priorities and timescales. As part of the metadata, it is especially important that a log be kept of instrument faults, exposure changes, and remedial action taken where data are used for climatological purposes.

Further information on station inspection and management can be found in WMO (2010c).

Fiebrich et al. (2006) summarized the frequency of routine maintenance for a sample of networks in the U.S., as shown in the following table:

| Network | Number of Sites | Frequency of Routine Maintenance |
| --- | --- | --- |
| AgriMet (P. Palmer 2004, personal communication) | 72 | Annual, in spring |
| ASOS (ASOS Program Office 1998) | 882 | 90 days* |
| California Irrigation Management Information System (CIMIS; www.cimis.water.ca.gov 2004) | 120 | 3 to 6 weeks |
| Climate Reference Network (NOAA 2003) | 59+ | 30 days** |
| Iowa Department of Transportation Road Weather Information System (maintenance contract available online at www.aurora-program.org) | 50 | Annual |
| Nebraska Automated Weather Data Network (G. Roebke 2004, personal communication) | 59 | Annual |
| Oklahoma Mesonet (Brock et al. 1995) | 116 | Spring, summer, and fall |
| Pennsylvania Department of Transportation Road Weather Information System (maintenance contract available online at www.aurora-program.org) | 49 | Annual |
| SNOTEL (J. Lea 2004, personal communication; G. Shaefer 2005, personal communication) | 706 | Approximately annual |
| West Texas Mesonet (Schroeder et al. 2005) | 40 | 60 days |

*Some tasks are performed every 90 days; other tasks are performed semiannually.

**Oversight by site host is performed every 30 days. Routine maintenance is performed by a technician on an annual basis.

Note: Since this table was published, the Nebraska Automated Weather Data Network has become more commonly known as the Nebraska Mesonet, and its number of sites has increased to 68 (per personal communication with Stonie Cooper, Nebraska Mesonet Manager, April 2018).

In addition to the information stated in the table above, the New York State Mesonet reported that they have regular spring and fall passes, as well as frequent summer visits to keep the grass cut. They visit their non-standard sites (such as profile sites) approximately every 60 days.

Not only do you need to schedule your routine maintenance tasks, but you’ll want to develop a procedure for handling these tasks. In this manner, your servicing will be consistent among stations, equipment, and technicians.

The following examples underscore the importance of routine, preventive maintenance and describe how other organizations have generally addressed the issue of maintenance scheduling for their mesonets. In particular, considerations for sensor rotation, individual network maintenance practices, and repair turn-around times for each measurement variable are discussed.

Arizona Meteorological Network (AZMET)

Timely maintenance is another aspect of quality control. Maintenance can be separated into two categories: technical and nontechnical. Technical maintenance involves repair and replacement of sensors and equipment, and is intimately tied to the calibration process. The only effective maintenance program for an Automated Weather Station is one based on preventive maintenance, that is, a maintenance program that rotates sensors, data loggers, and communications equipment before their performance degrades. It is essential to establish firm equipment rotation schedules based on manufacturers' recommendations, local network experience, and the experience of other networks. A network must maintain a considerable inventory of spare equipment as well as personnel capable of repairing degraded equipment to employ an effective preventive maintenance program. Most networks find technical maintenance is best performed by internal network personnel; success of technical maintenance performed by cooperators varies.

Nontechnical maintenance generally falls into the category of site maintenance. Station sites may require mowing, weeding, irrigation, and fence repair. Often, personnel responsible for nontechnical maintenance also clean and level sensors such as pyranometers, remove debris from rain gauge funnels, and listen for noisy bearings in rotating sensors such as anemometers. It is generally more cost effective to have local cooperators perform the nontechnical maintenance if stations are located well away from the network's center of operations.

Kansas Mesonet

Technicians from the Kansas State University Weather Data Library visit each station a minimum of two times each year: once during the spring and again in the fall. During these site visits, sensor, data logger, communications, and power supply systems are inspected to ensure they are functioning according to Kansas Mesonet operation standards. When a sensor is not functioning properly, it is replaced with a new one, and the faulty sensor is either returned to the manufacturer for repair and recalibration or recycled as electronic waste. During each visit, the overall site conditions (e.g., fencing, immediate area grass cover, surrounding fetch areas) are inspected to ensure accurate and representative measurements.

Kentucky Mesonet

Kentucky Mesonet technicians visit each station a minimum of three times a year: spring, summer, and fall. During each scheduled maintenance visit, technicians perform the required tasks to keep the instrumentation within manufacturer specifications. When a sensor cannot be kept within these specifications, it is brought back to the Kentucky Mesonet instrumentation laboratory to be recalibrated or decommissioned. Due to the design of the weighing bucket precipitation gauges, antifreeze must be added to the bucket during the fall site visit before the first expected freeze. The antifreeze is later removed during the spring site visit.

Oklahoma Mesonet

Sensors require routine maintenance and scheduled calibration that, in some cases, can be done only by the manufacturer. Ideally, maintenance and repairs are scheduled to minimize data loss (for example, snow-depth sensors repaired during the summer) or staggered in such a way that data from a nearby sensor can be used to fill gaps. In cases in which unscheduled maintenance is required, stocking replacement parts onsite ensures that any part of the network can be replaced immediately. The Oklahoma Mesonet provides this perspective:

The primary focus of network operations and maintenance is to obtain research-quality observations in real-time. From the receipt of a sensor from a vendor to the dissemination of real-time and archived products, the Oklahoma Mesonet follows a systematic, rigorous, and continually maturing protocol to verify the quality of all measurements. Quality-assurance consists of (1) proper site selection and configuration to minimize network inhomogeneities; (2) calibration and comparison of instrumentation before and after deployment to the field; (3) three scheduled annual maintenance passes; (4) automated quality assurance procedures performed as data are collected (repeated several times throughout the day and through subsequent days); and (5) manual inspection of the data (Shafer et al. 2000).

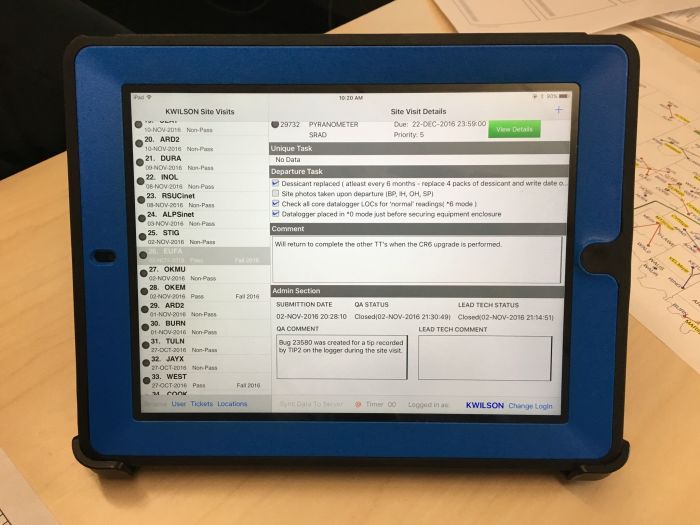

During each of three scheduled annual maintenance passes, Oklahoma Mesonet technicians perform standardized tasks, including cleaning and inspecting sensors, verifying depth of subsurface sensors, cutting and removing vegetation, taking photographs, and conducting on-site sensor calibrations (Fiebrich et al. 2006). In addition, technicians may upgrade equipment, rotate sensors, and perform communications testing and maintenance. Site visit forms and photo documentation are posted on the Mesonet website to make it easy for anyone using the data to investigate a site’s maintenance history. The automated quality assurance software of the Oklahoma Mesonet is designed to detect significant errors in the real-time data stream and to incorporate manual QA flags that capture subtle errors in the archived dataset. Observations are never altered; each datum is flagged as "good," "suspect," "warning," or "failure." Only "good" and "suspect" data are delivered in real time to users. For real-time data, up to eight QA tests are run per observation, operating on the past six hours of data. Once per day, up to 13 QA tests are run on each variable, operating on the past 30 days of data. As a result, more than 111 million calculations are completed daily. Currently, real-time QA completes within approximately one minute.
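
As a rough illustration of the flagging scheme described above, the following Python sketch selects which flagged observations would be passed to real-time users. The four flag names and the "good"/"suspect" delivery rule come from the text; the `Observation` record, the `real_time_feed` function, and the sample values are hypothetical and do not represent the Oklahoma Mesonet's actual software.

```python
from dataclasses import dataclass

# Flag names are taken from the text; everything else is illustrative.
ALL_FLAGS = {"good", "suspect", "warning", "failure"}
REAL_TIME_FLAGS = {"good", "suspect"}


@dataclass(frozen=True)
class Observation:
    """Hypothetical record layout; frozen so values cannot be altered."""
    station: str
    variable: str
    value: float
    flag: str


def real_time_feed(observations):
    """Return the observations eligible for real-time delivery.

    Observations are filtered by flag only; their values are never changed.
    """
    for obs in observations:
        if obs.flag not in ALL_FLAGS:
            raise ValueError(f"unknown QA flag: {obs.flag!r}")
    return [obs for obs in observations if obs.flag in REAL_TIME_FLAGS]


# Made-up sample data for demonstration only.
sample = [
    Observation("STN1", "air_temperature", 21.4, "good"),
    Observation("STN1", "relative_humidity", 101.0, "failure"),
    Observation("STN2", "wind_speed", 3.2, "suspect"),
    Observation("STN2", "pressure", 975.1, "warning"),
]

for obs in real_time_feed(sample):
    print(obs.station, obs.variable, obs.value, obs.flag)
```

Keeping the flag alongside the unmodified value, rather than correcting or deleting the value, mirrors the statement that observations are never altered.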

Furthermore, the Oklahoma Mesonet provides this information regarding the work of their technicians:

Whether for routine maintenance or for emergency repairs, the Mesonet technicians complete a site visitation report that details the date and time of the visit, as well as the type of work performed. During routine site visits, Mesonet technicians perform sensor inter-comparisons via a portable calibration kit (Fiebrich et al. 2004). The intercomparison system generates statistics which describe the difference between the station sensor and reference sensor observations. The field intercomparison report provides an abundance of information to the QA meteorologist, including indications of small sensor biases or drift.
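
The kind of statistics a field intercomparison might report can be sketched as follows. This is a minimal illustration under stated assumptions, not the Oklahoma Mesonet's portable calibration system: the function name, the choice of statistics (mean difference, standard deviation of the differences, and largest absolute difference), and the sample readings are all hypothetical.

```python
from statistics import mean, stdev


def intercomparison_stats(station_readings, reference_readings):
    """Summarize station-minus-reference differences for paired readings."""
    if len(station_readings) != len(reference_readings):
        raise ValueError("readings must be paired one-to-one")
    if len(station_readings) < 2:
        raise ValueError("at least two paired readings are required")
    diffs = [s - r for s, r in zip(station_readings, reference_readings)]
    return {
        "mean_diff": mean(diffs),                    # persistent bias
        "std_diff": stdev(diffs),                    # scatter about the bias
        "max_abs_diff": max(abs(d) for d in diffs),  # worst single reading
    }


# Made-up air temperature readings in degrees Celsius.
station = [20.5, 21.5, 22.5]
reference = [20.0, 21.0, 22.0]
print(intercomparison_stats(station, reference))
```

A mean difference that stays away from zero with little scatter would suggest a small, steady bias, while a mean difference that grows from one site visit to the next would suggest drift.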

The Oklahoma Mesonet makes use of preventive site visits to ensure the highest data quality:

Of equal importance, sensor cleaning, inspection, testing, and rotation are required frequently throughout the year. It is difficult to assess the value to data quality of performing these systematic tasks during the passes. Because the Oklahoma Mesonet is an operational network rather than a research network, the focus of its administrators is to disseminate the highest quality data possible to real-time users. In practice, the more often sensors are cleaned and inspected, the better they will perform. Hence, for cost efficiencies, the sensor tests and rotations are completed during the same site visit as that for vegetation maintenance. Although the above reasons encourage frequent visits during the growing season, financial considerations constrain the frequency to no more than three routine visits annually. Four full-time technicians are required to visit all stations across the state during a 3-month period (i.e., the length of a seasonal maintenance pass). Ongoing annual costs of the maintenance visits include four salary lines, maintenance for four vehicles, replacement of one vehicle, and travel expenses. Note that emergency site visits (resulting from sensor biases, sensor failures, lightning strikes, or communication failures) add two to three more visits per site per year. Table 2 lists the maximum time allowed for a technician to resolve emergency site or sensor problems detected by the Mesonet’s quality assurance system.

| Measurement | Repair Time |
| --- | --- |
| Air temperature at 1.5 m | 10 business days |
| Solar radiation | 10 business days |
| Relative humidity | 10 business days |
| Wind direction | 10 business days |
| Wind speed at 10 m | 10 business days |
| Pressure | 10 business days |
| Rainfall | 10 business days |
| Soil temperature at 10 cm | 10 business days |
| Air temperature at 9 m | 20 business days |
| Wind speed at 2 m | 20 business days |
| Soil temperatures at 5 and 30 cm | 20 business days |
| Soil moisture at 5, 25, and 60 cm | 20 business days |
| Wind speeds at 4 and 9 m | 30 business days |
| Soil heat flux | 30 business days |
| Skin temperature | 30 business days |
| Net radiation | 30 business days |
| Experimental observation | 30 business days |
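
To show how the repair times in the table above might be turned into due dates, here is a minimal Python sketch. The repair times are copied from the table; the function names are hypothetical, and the sketch assumes that the clock starts on the day the problem is detected, that business days are Monday through Friday, and that holidays are ignored. The source does not specify any of these details.

```python
from datetime import date, timedelta

# Maximum repair times (business days) copied from the table above.
MAX_REPAIR_BUSINESS_DAYS = {
    "air temperature at 1.5 m": 10,
    "solar radiation": 10,
    "relative humidity": 10,
    "wind direction": 10,
    "wind speed at 10 m": 10,
    "pressure": 10,
    "rainfall": 10,
    "soil temperature at 10 cm": 10,
    "air temperature at 9 m": 20,
    "wind speed at 2 m": 20,
    "soil temperatures at 5 and 30 cm": 20,
    "soil moisture at 5, 25, and 60 cm": 20,
    "wind speeds at 4 and 9 m": 30,
    "soil heat flux": 30,
    "skin temperature": 30,
    "net radiation": 30,
    "experimental observation": 30,
}


def add_business_days(start, days):
    """Step forward one calendar day at a time, counting only Mon-Fri."""
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # 0-4 are Monday through Friday
            remaining -= 1
    return current


def repair_deadline(measurement, detected_on):
    """Latest repair date for a problem detected on the given date."""
    return add_business_days(detected_on, MAX_REPAIR_BUSINESS_DAYS[measurement])


# A problem detected on Monday, January 1, 2024 (holidays ignored).
print(repair_deadline("rainfall", date(2024, 1, 1)))
print(repair_deadline("net radiation", date(2024, 1, 1)))
```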

Christopher A. Fiebrich, David L. Grimsley, Renee A. McPherson, Kris A. Kesler, and Gavin R. Essenberg, “The Value of Routine Site Visits in Managing and Maintaining Quality Data from the Oklahoma Mesonet,” Journal of Atmospheric and Oceanic Technology 23, no. 3 (2006): 406-416. http://journals.ametsoc.org/doi/pdf/10.1175/JTECH1852.1.

Guide to the Global Observing System, WMO-No. 488, Edition 2010, (Geneva, Switzerland: World Meteorological Organization, 2010).

Janet E. Martinez, Christopher A. Fiebrich, and Renee A. McPherson, “The Value of Weather Station Metadata,” (conference paper of the 15th Conference on Applied Climatology, Savannah, Georgia, June 23, 2005).

John L. Campbell, Lindsey E. Rustad, John H. Porter, Jeffrey R. Taylor, Ethan W. Dereszynski, James B. Shanley, Corinna Gries, Donald L. Henshaw, Mary E. Martin, Wade M. Sheldon, and Emery R. Boose, “Quantity is Nothing without Quality: Automated QA/QC for Streaming Environmental Sensor Data,” BioScience 63, no. 7 (2013): 574-585. https://doi.org/10.1525/bio.2013.63.7.10.

Kenneth G. Hubbard and M. V. K. Sivakumar, Automated Weather Stations for Applications in Agriculture and Water Resources Management: Current Use and Future Perspectives (proceedings of an international workshop, Lincoln, Nebraska, March 6-10, 2000). http://www.wamis.org/agm/pubs/agm3/WMO-TD1074.pdf.

“Overview,” Kansas State University, accessed June 19, 2017, http://mesonet.k-state.edu/about/.

Ronald L. Elliott, Derek S. Arndt, Mark A. Shafer, Noel I. Osborn, and William E. Lawrence, “The Oklahoma Mesonet: A Multi-Purpose Network for Water Resources Monitoring and Management” (conference paper of the Hazards in Water Resources, Boise, Idaho, July 23-26, 2007). http://opensiuc.lib.siu.edu/cgi/viewcontent.cgi?article=1019&context=ucowrconfs_2007.

“Site Maintenance,” Kentucky Mesonet, accessed June 20, 2017, http://l.kymesonet.org/instrument.html#!sitemaint.

Steven J. Meyer and Kenneth G. Hubbard, “Nonfederal Automated Weather Stations and Networks in the United States and Canada: A Preliminary Survey,” Bulletin of the American Meteorological Society 73, no. 4 (1992): 449-457. http://journals.ametsoc.org/doi/pdf/10.1175/1520-0477(1992)073%3C0449:NAWSAN%3E2.0.CO%3B2.

Considerations that Affect Your Maintenance Schedule

As you outline the routine maintenance schedule for your mesonet, you may discover a growing number of variables that affect the schedule and need to be taken into consideration. Some of these considerations are briefly described in the following sections.

Number of stations and distance between them

Whether your mesonet consists of 10 or 100 automated weather stations, some level of service and periodic maintenance is needed. Obviously, the more stations you have, the more resources are needed to visit the stations, inspect the equipment, and repair or replace the equipment. In addition, consider the travel time required for technicians to routinely visit the stations spread across your mesonet.

Site access

Many mesonet stations are located on private property, which may cause accessibility issues. For example, to maintain goodwill with the landowner, it may be necessary to limit the frequency of site visits for maintenance and service. In contrast, automated weather station sites that are located on university property may be easier to access, thus allowing for more frequent visits and more flexible maintenance schedules.

Sensor design and performance

The design and performance characteristics of sensors can affect the frequency of needed maintenance. If instruments are designed correctly for use in the intended environment, they should be able to operate in the field unattended for months to years. The sensor should hold its calibration characteristics between servicing intervals. Also, to ensure ongoing data quality, the sensor should be designed so that it can be easily serviced and/or swapped out in the field for a recently calibrated sensor of the same model and manufacturer.

Maintenance intervals are dependent upon the characteristics of the instruments. The World Meteorological Organization offers this:

The most important requirements for meteorological instruments are the following:

- Uncertainty, according to the stated requirement for the particular variable;

- Reliability and stability;

- Convenience of operation, calibration and maintenance;

- Simplicity of design which is consistent with requirements;

- Durability;

- Acceptable cost of instrument, consumables and spare parts;

- Safe for staff and the environment.

With regard to the first two requirements, it is important that an instrument should be able to maintain a known uncertainty over a long period. This is much better than having a high level of initial confidence (meaning low uncertainty) that cannot be retained for long under operating conditions.

Sensor durability and budget

Quality control and maintenance decisions begin with buying quality equipment for your mesonet. The World Meteorological Organization offers this perspective:

Simplicity, strength of construction, and convenience of operation and maintenance are important since most meteorological instruments are in continuous use year in, year out, and may be located far away from good repair facilities. Robust construction is especially desirable for instruments that are wholly or partially exposed to the weather. Adherence to such characteristics will often reduce the overall cost of providing good observations, outweighing the initial cost.

Meteorological sensors that are exposed to the environment and a range of contaminants day in and day out, year-round, may experience sensor drift over time. In addition, sensors located in dirty or corrosive environments may require more frequent servicing. Unfortunately, financial considerations may constrain the frequency of site visits to no more than two or three visits annually, thus affecting the quality of the data obtained. Moreover, funding restrictions may even influence, or perhaps dictate, the purchase of less expensive, lower-quality equipment. These equipment costs, however, are generally minor when compared to the long-term operating costs of equipment in the field. Sensors that fail or lose calibration quickly can generate tremendous operating costs for a network.

For ongoing budget assessments, it is critical to include the periodic replacement and upgrade of equipment (such as sensors) as anticipated costs for mesonet stations.

Specific sensor types

Depending on the sensor type, different maintenance routines and schedules may be needed. The following sections highlight some typical maintenance considerations for common sensor types used in mesonets.

Air temperature sensors

Thermistors and platinum resistance thermometers (PRTs) are common air temperature sensors used in mesonet stations. The output signal of these sensors is based on the electrical resistance of the sensing circuit, which corresponds to the ambient temperature. Factory calibration procedures generate a mathematical curve that relates resistance to air temperature over the measurement range of the sensor. Temperature sensors should be checked or calibrated every one to two years to detect drift and to validate the calibration curve equation.

The Quality Assurance Handbook for Air Pollution Measurement Systems, Volume IV Meteorological Measurements offers a discussion on calibration procedures.

Relative humidity sensors

Relative humidity sensors are based on an electronic component that absorbs water vapor according to air humidity and changes its electrical impedance (resistance or capacitance). This type of sensor usually consists of a probe attached directly, or by a cable, to an electronics unit that records the relative humidity reading.

The screen covering the tip of the probe (composed of metal, GORE-TEX, etc.) should be checked for contaminants. When a relative humidity sensor is installed near the ocean or other body of salt water, a coating of salt (mostly NaCl) may build up on the radiation shield, sensor, filter, and even the chip.

The relative humidity over a saturated NaCl solution is approximately 75 percent. A buildup of salt on the filter or humidity chip will delay or destroy the response to atmospheric humidity. To remove the buildup, rinse the filter gently in distilled water. If necessary, the chip from some sensors can be removed and rinsed as well. (It might be necessary to rinse the chip more than once.)

Caution: Touching the humidity chip can damage the sensing element produced by some manufacturers.

Long-term exposure of the relative humidity sensor to certain chemicals and gases may affect the characteristics of the sensor and shorten its life. How well the sensor withstands pollutants depends strongly on the temperature and humidity conditions and the duration of exposure. Calibrate the sensor annually.

Resource: For more information about combination air temperature and relative humidity sensors, read the “Air Temperature and RH Sensors: What You Need to Know” blog article.

Radiation shields for air temperature and relative humidity sensors

Radiation shields are essential for the accurate measurement of ambient air temperature. The two types of radiation shields are naturally aspirated and mechanically aspirated:

- Naturally aspirated shields have a louvered construction that allows air to pass freely through the shield, keeping the probe at or near ambient temperature. The shield's white color reflects solar radiation.

- Mechanically aspirated radiation shields use a blower requiring external power. The shield draws ambient air over the sensor while protecting the sensor from the interference of solar radiation. The result is a more accurate temperature and humidity measurement.

Check the radiation shield monthly to ensure it is free from dust and debris. To clean the shield, first remove the sensor. Then, dismount the shield, and brush off all the loose dirt. If additional cleaning of the shield is needed, use warm, soapy water and a soft cloth or brush. Allow the shield to dry before remounting.

Radiation shields may be prone to interference by insects. For example, wasps may make nests or spiders may build webs inside the shields. Remove any blockages that may restrict air flow.

Additional maintenance tasks for radiation shields include cleaning and tightening. Inspect and clean both the shield and probe periodically to maintain optimum performance. When the shield becomes coated with a film of dirt, wash it with mild soap and warm water. Use rubbing alcohol to remove any oil film. Do not use any other solvent. Also, check the mounting bolts periodically for possible loosening caused by tower vibration.

Mechanical wind speed and direction sensors

The frequency with which a mechanical wind sensor needs to be maintained depends upon the environment in which the automated weather station is located:

- For a windy, dusty environment, the sensor requires maintenance once every six months.

- For an environment with a moderate amount of wind, sensor maintenance is required once a year.

- For an environment with minimal wind, you may only need to perform sensor maintenance once every two years.

Because maintenance of these sensors often requires replacement of the anemometer bearings and potentiometer, it is recommended that this maintenance be performed by a trained electronics technician.

Ultrasonic wind speed and wind direction sensors

If contamination builds up on the sensor, gently clean it with a cloth moistened with mild detergent. Do not use solvents, and take care to avoid scratching any surfaces. If the sensor is exposed to snow or icy conditions, allow it to defrost naturally.

Caution: Do not attempt to remove ice or snow using a tool, as it may damage the sensor.

Ultrasonic wind speed and wind direction sensors have no moving parts or user-serviceable parts requiring routine maintenance. Opening the unit or breaking the security seal will typically void the warranty and the calibration. In the event of failure, prior to returning the sensor to the manufacturer, check all cables and connectors for continuity, bad contacts, corrosion, etc. When a sensor is returned to the manufacturer for servicing, a bench test is carried out, as described in the sensor manual.

Solar radiation sensors

Once a month, inspect the solar radiation sensor. As needed, clean it using a soft, clean, damp cloth. If necessary, adjust the sensor to ensure it is level. Once every two to three years, have the solar radiation sensor recalibrated. For this calibration service, you will need to remove the sensor and send it to the manufacturer for calibration against traceable instrument references.

Precipitation sensors

Precipitation sensors are often rain gages that may have snowfall adapters. The two types of electronic precipitation sensors most commonly found in unattended automated weather stations use either a weighing mechanism or a tipping bucket. Tipping buckets are commonly used in U.S. climatological networks because of their lower cost and simplicity. Weighing gages are typically two to three times costlier than tipping bucket gages, but their accuracy does not depend on rainfall intensity. Moreover, their resolution is limited only by the mechanical sensitivity of the platform and displacement sensor.

Standard maintenance of rain gages includes the removal of debris from the gages and periodic validation checks to ensure that the sensors are properly reporting data.

The following is an example of a field check:

To test the rain gauge, the technician drips a known quantity of water from a specially designed reservoir into the gauge at a known constant rate. The gauge’s response is compared to its calibration data from the laboratory records. The technician then partially disassembles the gauge, visually inspects, cleans, and reassembles it, and repeats the calibration test.
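For a tipping bucket gauge, the comparison in a field check like this one comes down to simple arithmetic: the known volume of water, the collector area, and the depth of rain per tip give an expected tip count. The sketch below assumes a circular collector; the function names, the collector diameter, and the depth per tip are illustrative values, not figures from any particular gauge.

```python
import math


def expected_tips(volume_ml: float, orifice_diameter_cm: float, mm_per_tip: float) -> float:
    """Expected tip count for a known volume of water poured through the gauge."""
    area_cm2 = math.pi * (orifice_diameter_cm / 2) ** 2
    # Depth per tip converted from mm to cm; 1 cubic centimeter equals 1 mL.
    ml_per_tip = area_cm2 * (mm_per_tip / 10.0)
    return volume_ml / ml_per_tip


def percent_error(observed_tips: float, volume_ml: float,
                  orifice_diameter_cm: float, mm_per_tip: float) -> float:
    """Signed error of the observed tip count relative to the expected count."""
    expected = expected_tips(volume_ml, orifice_diameter_cm, mm_per_tip)
    return 100.0 * (observed_tips - expected) / expected
```

A negative result means the gauge under-counted relative to the known volume. Whether a given error is acceptable depends on the gauge's laboratory calibration records and your network's tolerance.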

Once every five years, have the rain gage sensor recalibrated. You will need to remove the sensor and return it to the manufacturer.

Barometric pressure sensors

Because electronic barometric pressure sensors are semi-sealed, minimal maintenance is required.

Typical maintenance includes:

- Visual inspection of the cable connection to ensure it is clean and dry

- Visual inspection of the casing for damage

- Assurance that the pneumatic connection and pipe are secure and undamaged

The external case can be cleaned with a damp, lint-free cloth and a mild detergent solution. Under normal use, recalibration intervals are typically every two years. In site environments where many contaminants are present, recalibration every year is recommended.

Soil temperature and soil moisture sensors

Independent sensors that measure soil temperature typically require minimal maintenance after they are installed. If incorrect temperature readings occur, validate the software coefficients and ensure that the mechanical connections to the excitation voltage and input channels are sound. (The wires for the analog sensors must be in contact with the screw terminal and not the wire insulation.) Periodically check the cabling for signs of damage (such as being chewed on by rodents) and possible moisture intrusion.

Soil temperature and soil moisture (or soil volumetric water content) probes typically do not need recalibration after they are installed. If more accuracy is required by the application, sensors can be individually calibrated to remove any offset in the measurement.

Note: Barring catastrophic failures, it is recommended to leave soil moisture or soil temperature sensors undisturbed after they are buried. Disturbing the soil can greatly impact the quality of measurements related to temperature gradients and water movement in the soil.

Christopher A. Fiebrich, David L. Grimsley, Renee A. McPherson, Kris A. Kesler, and Gavin R. Essenberg, “The Value of Routine Site Visits in Managing and Maintaining Quality Data from the Oklahoma Mesonet,” Journal of Atmospheric and Oceanic Technology 23, no. 3 (2006): 406-416. http://journals.ametsoc.org/doi/pdf/10.1175/JTECH1852.1.

Donna F. Tucker, “Surface Mesonets of the Western United States,” Bulletin of the American Meteorological Society 78 (1997): 1485–1495. https://doi.org/10.1175/1520-0477(1997)078<1485:SMOTWU>2.0.CO;2.

G. Stanhill, “Accuracy of global radiation measurements at unattended, automatic weather stations,” Agricultural and Forest Meteorology 61, no. 1-2 (1992): 151-156. https://doi.org/10.1016/0168-1923(92)90030-8.

Guide to the Global Observing System, WMO-No. 488, Edition 2010, (Geneva, Switzerland: World Meteorological Organization, 2010).

Kenneth G. Hubbard and M. V. K. Sivakumar, Automated Weather Stations for Applications in Agriculture and Water Resources Management: Current Use and Future Perspectives (proceedings of an international workshop, Lincoln, Nebraska, March 6-10, 2000). http://www.wamis.org/agm/pubs/agm3/WMO-TD1074.pdf.

Mark A. Shafer, Christopher A. Fiebrich, Derek S. Arndt, Sherman E. Fredrickson, and Timothy W. Hughes, “Quality Assurance Procedures in the Oklahoma Mesonetwork,” Journal of Atmospheric and Oceanic Technology 17, no. 4 (2000): 474-494. http://cig.mesonet.org/staff/shafer/Mesonet_QA_final_fixed.pdf.

Quality Assurance Handbook for Air Pollution Measurement Systems Volume IV: Meteorological Measurements, Version 2.0, Final (Research Triangle Park, North Carolina: U.S. Environmental Protection Agency, 2008). https://www.osti.gov/biblio/7092387.

Stephanie Bell, “The beginner’s guide to humidity measurement” (London: National Physical Laboratory, 2011). http://www.npl.co.uk/content/ConPublication/7468.

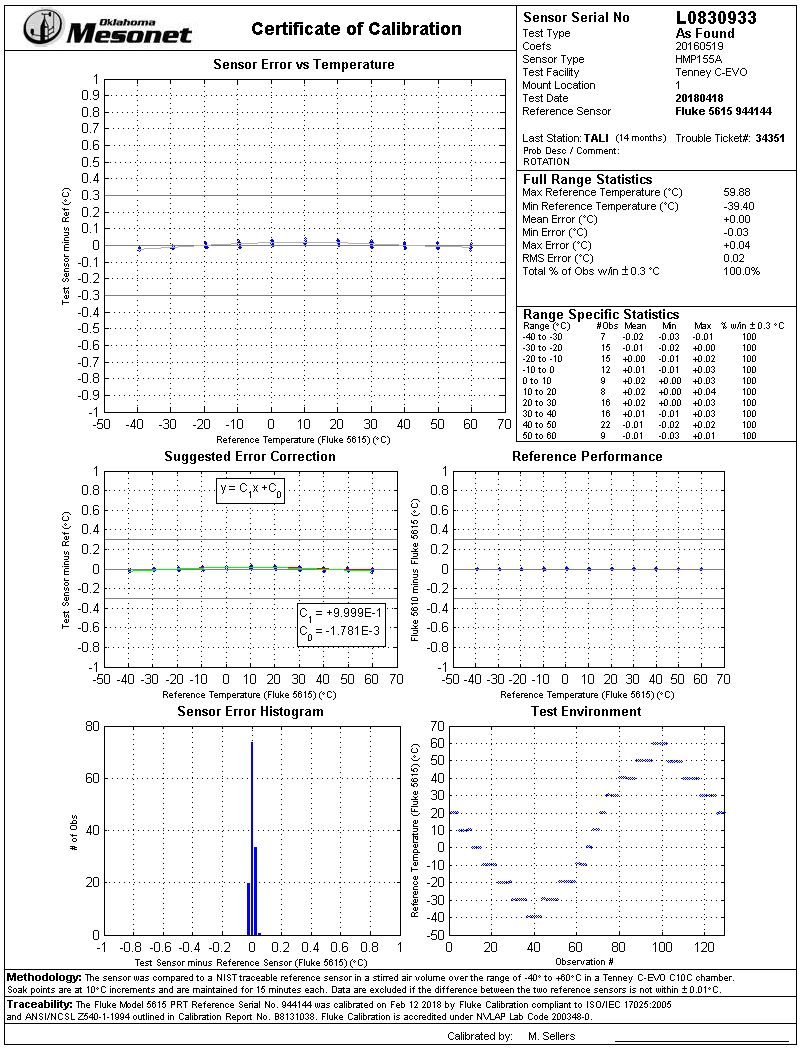

Calibrating Your Sensors

Sensor calibration is a task commonly included in a mesonet maintenance schedule to provide better sensor performance and better measurement data. Calibration is the comparison of a sensor against a reference value. A calibration certificate reports any sensor errors and provides the uncertainty in those errors. Calibration corrections then need to be applied to the sensor readings (or noted as a source of uncertainty). With any measurement, the practical uncertainty in using the sensor is always greater than the uncertainty stated on the calibration certificate.
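The following sketch shows one way to apply a certificate correction and to see why the in-use uncertainty exceeds the certificate value. It assumes the certificate reports the error as sensor reading minus reference, and that the uncertainty components are independent so they can be combined in quadrature (root sum of squares). Both the function names and these assumptions are illustrative.

```python
import math


def corrected_reading(raw: float, certificate_error: float) -> float:
    """Remove the error reported on the certificate (sensor minus reference)."""
    return raw - certificate_error


def combined_uncertainty(certificate_u: float, *other_us: float) -> float:
    """Combine the certificate uncertainty with other independent components."""
    return math.sqrt(certificate_u ** 2 + sum(u ** 2 for u in other_us))
```

Because every added component (for example, drift since calibration or siting effects) contributes a non-negative term, the combined value can never be smaller than the certificate uncertainty alone.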

(Image courtesy of David Grimsley, Calibration Laboratory Manager, Oklahoma Mesonet)

Proper calibration is essential to data quality control, and most networks use two types of calibration procedures: standards calibration and on-site calibration [sic]. Standards calibration is generally completed at some form of calibration facility where network sensors are compared against "standard" network sensors. It is best to check sensors over the expected dynamic range of the parameter in question. Few networks possess true traceable calibration standards, but instead opt to use as "standards" high-quality sensors that are periodically returned to the manufacturer or an independent facility for calibration against traceable standards. Some networks choose to send some or all of their sensors to manufacturers for calibration, but this can be a costly process.

On-site calibration [sic] consists of comparing weather station sensors with "travel standards" over rather short periods of time. Travel standards are mounted at the site in a manner similar to permanent station sensors, and the outputs from the standard and station sensors are compared. Station sensors are replaced if their outputs deviate markedly from those of the travel standards.

Note: In the quote above, what is called “on-site calibration” is more commonly and properly referred to as “on-site validation” or an “on-site performance audit.” Generally, “calibration” refers to actions performed in a lab or controlled environment, whereas “validation” occurs on-site, in the field.
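An on-site comparison against a travel standard can be reduced to a mean difference over paired readings. The sketch below assumes simultaneous samples from the station sensor and the travel standard, and a tolerance that you supply. The function names and the tolerance value are hypothetical; the source does not define "deviate markedly" numerically.

```python
from statistics import mean


def mean_difference(station: list, standard: list) -> float:
    """Mean of (station minus travel standard) over paired readings."""
    if len(station) != len(standard) or not station:
        raise ValueError("need two non-empty series of equal length")
    return mean(s - r for s, r in zip(station, standard))


def needs_replacement(station: list, standard: list, tolerance: float) -> bool:
    """True if the station sensor deviates from the standard by more than tolerance."""
    return abs(mean_difference(station, standard)) > tolerance
```

In practice you may also want to inspect the spread of the differences, since a short comparison period can hide intermittent problems.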

Christopher A. Fiebrich, David L. Grimsley, Renee A. McPherson, Kris A. Kesler, and Gavin R. Essenberg, “The Value of Routine Site Visits in Managing and Maintaining Quality Data from the Oklahoma Mesonet,” Journal of Atmospheric and Oceanic Technology 23, no. 3 (2006): 406-416. http://journals.ametsoc.org/doi/pdf/10.1175/JTECH1852.1.

Kenneth G. Hubbard and M. V. K. Sivakumar, Automated Weather Stations for Applications in Agriculture and Water Resources Management: Current Use and Future Perspectives (proceedings of an international workshop, Lincoln, Nebraska, March 6-10, 2000). http://www.wamis.org/agm/pubs/agm3/WMO-TD1074.pdf.

Calibrating Your Data Loggers

Calibration services are an important part of maintaining data logger measurement fidelity in a mesonet. Because analog measurement drift is influenced by the environment, it is difficult to give an absolute guideline for recommended intervals of recalibration. Data loggers from some manufacturers may still be within specifications after five or six years if used in moderate conditions. Data loggers from other manufacturers, in contrast, may drift out of specification within a year or two when operated in extreme environments.

By comparing the before-and-after calibration readings, you can determine how often recalibration should occur. A recommended approach is to recalibrate after two years and then extend or reduce the time interval based on the results of the recalibration.
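One possible way to express the extend-or-reduce approach is a small rule based on how much of the specification limit the as-found drift consumed. The thresholds, step sizes, and bounds below are hypothetical choices for illustration only; they are not a manufacturer's recommendation.

```python
def next_interval_years(current_interval: float, as_found_drift: float,
                        spec_limit: float, min_years: float = 1.0,
                        max_years: float = 6.0) -> float:
    """Suggest the next recalibration interval from the as-found drift."""
    ratio = abs(as_found_drift) / spec_limit
    if ratio >= 1.0:
        # Out of specification when received: shorten the interval.
        proposed = current_interval / 2
    elif ratio <= 0.5:
        # Well within specification: extend by one year.
        proposed = current_interval + 1
    else:
        proposed = current_interval
    return max(min_years, min(max_years, proposed))
```

Starting from the suggested two-year interval, a logger found at 20% of its specification limit would move to three years, while one found out of specification would move to one year.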

Christopher A. Fiebrich, David L. Grimsley, Renee A. McPherson, Kris A. Kesler, and Gavin R. Essenberg, “The Value of Routine Site Visits in Managing and Maintaining Quality Data from the Oklahoma Mesonet,” Journal of Atmospheric and Oceanic Technology 23, no. 3 (2006): 406-416. http://journals.ametsoc.org/doi/pdf/10.1175/JTECH1852.1.

“Repair Department,” Campbell Scientific, Inc., accessed June 20, 2017, https://www.campbellsci.com/repair-dept.

Replacement Equipment

To maintain the equipment used throughout your mesonet and prevent considerable downtime of your data collection, you will want to have sufficient replacement equipment on hand. This includes user-replaceable parts, as well as extra quantities of equipment that is not user-serviceable and would need to be sent back to the factory for repair or recalibration. To make your task easier and less costly, it is advisable to use the same equipment from one automated weather station to the next. For example, use the same model of soil moisture and temperature sensor for all of your mesonet stations.

By equipping operational sites with similar sensors, the potential of measurement bias, when comparing measurements between sites, is reduced. This strategy also allows technicians to draw from a common stock of spare sensors when sensor replacement is required. By using similar instruments at all field sites, a user does not need to be concerned by different instrument error characteristics when comparing data between sites.

When you are determining how much inventory of user-replaceable parts and extra equipment to keep on hand, your budget constraints play a significant role. The following are some additional factors you may wish to consider:

- The likelihood that your equipment will be vandalized by people or damaged by animals or birds

- The likelihood of extreme weather (such as lightning, tornadoes, or hail) affecting the mesonet area and causing damage to your equipment

- The quality and durability of your equipment and the likelihood that it will fail

- Your downtime tolerance and the tolerance level of your stakeholders for bad or missing data in the data products you provide them with

Christopher A. Fiebrich, David L. Grimsley, Renee A. McPherson, Kris A. Kesler, and Gavin R. Essenberg, “The Value of Routine Site Visits in Managing and Maintaining Quality Data from the Oklahoma Mesonet,” Journal of Atmospheric and Oceanic Technology 23, no. 3 (2006): 406-416. http://journals.ametsoc.org/doi/pdf/10.1175/JTECH1852.1.

Mark A. Shafer, Christopher A. Fiebrich, Derek S. Arndt, Sherman E. Fredrickson, and Timothy W. Hughes, “Quality Assurance Procedures in the Oklahoma Mesonetwork,” Journal of Atmospheric and Oceanic Technology 17, no. 4 (2000): 474-494. http://cig.mesonet.org/staff/shafer/Mesonet_QA_final_fixed.pdf.

Managing Your Equipment Inventory

The inventory for your mesonet may include recalibration equipment, user-replaceable parts, and replacement equipment. If you have quantities of all this equipment on hand for the multitude of stations in your mesonet, it’s easy to see that you can have a lot of equipment to keep track of over the course of years or decades. For example, you’ll want to know if a piece of equipment is at a station site or is being repaired at the manufacturer’s facility. As another example, you’ll want to know if a type of sensor at a particular site keeps needing to be replaced, indicating that there may be an unforeseen site condition causing equipment failure.

For climate applications, the demanding requirement for sensor traceability (documenting the history, location, and application of a sensor) is critical because of the need to detect small trends over long periods and to understand the potential effect of the deployment lifecycle of a sensor. For example, if a significant change in a climate variable (such as temperature) is recorded, you will want to know if that data represents an actual temperature phenomenon or if it may be indicative of a troublesome sensor that was recently added to a site to replace a sensor that needed recalibration. The troublesome sensor would then need to be repaired, or removed from service, to terminate the collection of inaccurate data.

The World Meteorological Organization recommends tracking instrument location as well:

It is especially important to minimize the effects of changes of instrument and/or changes in the siting of specific instruments. Although the static characteristics of new instruments might be well understood, when they are deployed operationally they can introduce apparent changes in site climatology. In order to guard against this eventuality, observations from new instruments should be compared over an extended interval (at least one year; see the Guide to Climatological Practices (WMO, 2011a)) before the old measurement system is taken out of service. The same applies when there has been a change of site. Where this procedure is impractical at all sites, it is essential to carry out comparisons at selected representative sites to attempt to deduce changes in measurement data which might be a result of changing technology or enforced site changes.

Tools for inventory management

To track your abundant equipment inventory for your mesonet, the following are some helpful tools you can use:

- A tagging system for each piece of equipment, such as barcodes or serial numbers

- A method to record the identifying information for the equipment, such as a barcode reader, spreadsheet, mobile app, and/or database

- A method to record metadata for each station/site, such as when a sensor was replaced or recalibrated

- A reminder system that alerts you to upcoming maintenance dates for recalibrations or battery replacements

- A low-inventory warning that notifies you when you may want to reorder equipment
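The last two tools in the list above can be sketched in a few lines. The data shapes here are assumptions: spares are represented as a list of model names on the shelf, and maintenance due dates as a dictionary keyed by a free-form task label. All names are hypothetical.

```python
from collections import Counter
from datetime import date, timedelta


def low_inventory(spares: list, minimums: dict) -> list:
    """Return the models whose spare count is below the required minimum."""
    counts = Counter(spares)
    return sorted(model for model, minimum in minimums.items()
                  if counts[model] < minimum)


def upcoming_maintenance(due_dates: dict, today: date, window_days: int = 30) -> list:
    """Return tasks that are overdue or fall due within the next window_days."""
    horizon = today + timedelta(days=window_days)
    return sorted(task for task, due in due_dates.items() if due <= horizon)
```

A spreadsheet or database can serve the same purpose; the point is that both checks are simple comparisons once the inventory and due dates are recorded consistently.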

Some intelligent sensors can store calibration coefficients on the sensor along with the sensor serial number. Other sensors that have analog outputs require the performance and calibration data to be stored in a database program. The Oklahoma Mesonet has a regimented system for capturing metadata from their 3,300 sensors:

Historical calibration and coefficient data for sensors are another essential part of the Oklahoma Mesonet’s QA system. Sensor and equipment metadata include serial number, vendor, manufacturer, model, cost, and the dates the sensors were purchased, commissioned, or decommissioned. The Mesonet calibration laboratory manager archives this information in an online database. The database also archives the sampling interval, measurement interval, measurement unit, and installation height for each variable. In addition, the lab manager documents the calibration characteristics of each sensor both before the sensor is deployed to the remote station and immediately after the sensor is returned from the field. Since a sensor may have numerous coefficients during its lifetime, the database stores the coefficients along with the date of calibration.
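A minimal sketch of a record that stores coefficients along with their calibration dates might look like the following. It is not the Oklahoma Mesonet's actual database schema; the class names, fields, and example values are hypothetical and cover only a few of the metadata items described above.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Calibration:
    calibrated_on: date
    coefficients: tuple


@dataclass
class SensorRecord:
    serial_number: str
    manufacturer: str
    model: str
    calibrations: list = field(default_factory=list)

    def add_calibration(self, calibrated_on: date, coefficients) -> None:
        """Store a calibration and keep the history in date order."""
        self.calibrations.append(Calibration(calibrated_on, tuple(coefficients)))
        self.calibrations.sort(key=lambda c: c.calibrated_on)

    def coefficients_on(self, when: date):
        """Return the coefficients in effect on `when`, or None if none existed yet."""
        current = None
        for cal in self.calibrations:
            if cal.calibrated_on <= when:
                current = cal.coefficients
        return current
```

Keeping the full history, rather than only the latest coefficients, makes it possible to reprocess older data with the coefficients that applied when the data was collected.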

Christopher A. Fiebrich, David L. Grimsley, Renee A. McPherson, Kris A. Kesler, and Gavin R. Essenberg, “The Value of Routine Site Visits in Managing and Maintaining Quality Data from the Oklahoma Mesonet,” Journal of Atmospheric and Oceanic Technology 23, no. 3 (2006): 406-416. http://journals.ametsoc.org/doi/pdf/10.1175/JTECH1852.1.

Additional Resources for Your Maintenance Tasks

To perform your maintenance tasks, you will need equipment directly related to those tasks. For example, to replace batteries, you will need spare batteries. In addition, you will need a variety of tangible resources and human resources. Making these resources readily available will aid you considerably in the efficient execution of your maintenance tasks. The following sections describe tangible resources you can use for your maintenance tasks.

Sensor-specific tools

The following tools will help you with the maintenance of specific sensor types:

- Air temperature

  - Environmental chamber for use under computer control to validate temperature signal output, as well as to validate sensor response versus temperature

  - For sealed probes, access to a glycol or ice bath for validation of temperature performance at a few data points

- Relative humidity

  - Dew point generator with a manifold to accommodate multiple sensors

- Wind speed

  - Access to a wind tunnel or anemometer drive, providing a convenient and accurate method to rotate an anemometer shaft at a known rate

- Solar radiation

  - Secondary standard or recently calibrated sensor from same manufacturer

- Precipitation

  - Calibration apparatus with known flow rate and volume

- Soil temperature

  - Environmental chamber for use under computer control to validate temperature signal output, as well as to validate sensor response versus temperature

  - For sealed probes, access to a glycol or ice bath for validation of temperature performance at a few data points

- Soil moisture

  - Water matric probes to measure the matric potential via the heat dissipation method; can immerse the probe in distilled water for five days to achieve the wet point and then seal it in desiccant for five days to achieve the dry point

Onsite intercomparison and validation

Mesonet instruments deployed in the field require periodic field validation with co-located instruments (preferably identical) for dynamic intercomparison. As an example of field intercomparison, the Oklahoma Mesonet does the following:

Oklahoma Mesonet personnel properly calibrate each sensor before deployment at a remote site (Shafer et al. 2000). However, instrument drift is a major cause of poor quality data (Hollinger and Peppler 1995). Thus, to examine sensor accuracy during a sensor’s lifetime—a critical step to verifying data quality in the field (Brock and Richardson 2001)—Mesonet personnel have developed a portable system…to perform standardized field comparisons.

(Photo courtesy of RH Systems, Gilbert, AZ)

Observations from the air temperature, relative humidity, solar radiation, and pressure sensors are compared to calibrated reference sensors…The system includes an integrated aspirator to provide homogeneous air volume for both the reference and station temperature and humidity sensors. Customized Palm OS software (PalmSource, Inc.) on a personal data assistant collects and analyzes these comparison observations. In addition to displaying comparison data for on-site evaluation, the software also generates a detailed report (see Table 6) for analysis by the quality assurance meteorologist. The system requires minimal interaction by field personnel and communicates automatically with the station datalogger when connected. The system is expandable so that other station sensors can be compared as needed. The in-field intercomparisons provide two distinct benefits: 1) they identify subtle sensor problems, and 2) they provide guidance for determining the true start time of data quality problems. The errors tolerated during the field sensor intercomparisons are listed…These thresholds were based on approximately 350 field intercomparison tests completed at Oklahoma Mesonet sites. Using these thresholds during the 2004 site passes, 10 sensors were determined to have drifted out of tolerance. Those sensors included four relative humidity sensors, two pyranometers, and four air temperature sensors. Even more important than on-site error identification, the intercomparison tests create a wealth of metadata and statistics for the quality assurance meteorologists of the Oklahoma Mesonet. When a new data quality problem is discovered by either automated or manual techniques (Martinez et al. 2004; Shafer et al. 2000), the intercomparison reports provide a baseline for helping to determine how far back to manually flag the data as erroneous. 
For instance, if one of the components of the Mesonet’s quality assurance system identifies a 6% relative humidity bias at a station, the previous intercomparison reports from the station are used to determine whether the drift increased steadily with time or occurred abruptly.
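To illustrate how previous intercomparison reports might be used this way, the sketch below takes a list of (date, bias) pairs, finds the most recent report that was still within tolerance, and makes a crude distinction between steady and abrupt drift. The tolerance, the 0.8 fraction, and the function names are hypothetical; the quoted source does not give its thresholds here.

```python
from datetime import date


def last_good_report(reports: list, tolerance: float):
    """Date of the most recent report within tolerance, or None if there is none."""
    good = [day for day, bias in sorted(reports) if abs(bias) <= tolerance]
    return good[-1] if good else None


def drift_pattern(reports: list, abrupt_fraction: float = 0.8) -> str:
    """Classify drift as 'steady' or 'abrupt' from consecutive report biases."""
    biases = [bias for _, bias in sorted(reports)]
    if len(biases) < 3:
        return "undetermined"
    total = abs(biases[-1] - biases[0])
    if total == 0:
        return "undetermined"
    largest_step = max(abs(b2 - b1) for b1, b2 in zip(biases, biases[1:]))
    # Abrupt if a single step between reports accounts for most of the change.
    return "abrupt" if largest_step >= abrupt_fraction * total else "steady"
```

A quality assurance meteorologist would still need to review the data itself; the reports only bound the period in which the problem began.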

Other equipment

The following is a list of common hardware tools used to install an automated weather station:

- User-supplied key for enclosure lock

- Magnetic declination angle for site (for aligning the wind direction sensor)

- Tape measure: 12 feet for a mounting structure 10 feet tall; 20 feet for a mounting structure 30 feet tall

- A set of open-ended wrenches: 3/8, 7/16, 1/2, and 9/16 in.

- Levels: 12 to 24 in. and 24 to 48 in.

- Pair of pliers

- Magnetic compass

- 12-inch pipe wrench

- Hammers: small sledge and claw

- Felt-tipped marking pen

- Socket wrench and 7/16 in. deep well socket

- Allen wrench set in SAE (Society of Automotive Engineers) units (fractions of an inch)

- Straight-bit screwdrivers: small, medium, and large

- Phillips-head screwdrivers: small and medium

- Small diagonal side-cuts

- Needle-nose pliers

- Wire strippers

- Pocket knife

- Calculator

- Volt/ohm meter

- Electrical tape

- 6-foot stepladder

- Data logger prompt sheet and manuals

- Station log and pen

- Wire ties and tabs

- Desiccant
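The magnetic declination angle in the list above is used together with the magnetic compass to find true north when aligning the wind direction sensor. As a small sketch, assuming the common convention that east declination is positive and west is negative (function names are hypothetical):

```python
def magnetic_to_true(magnetic_bearing_deg: float, declination_deg: float) -> float:
    """Convert a magnetic compass bearing to a true bearing (east declination positive)."""
    return (magnetic_bearing_deg + declination_deg) % 360.0


def compass_heading_for_true_north(declination_deg: float) -> float:
    """Compass reading to sight along so that you are facing true north."""
    return (-declination_deg) % 360.0
```

With a declination of 10 degrees east, for example, true north lies along a compass reading of 350 degrees. Declination changes with location and slowly over time, so use a current value for each site.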

To transport the equipment in the list above, as well as your equipment inventory, you will need vehicles that can store the equipment and traverse the terrain to your distant mesonet stations throughout the year.

Digital tools

Documenting field site characteristics initially and over time—preferably during scheduled site maintenance visits—is an important task. One of the tools used for this documentation is a digital camera. The Oklahoma Mesonet relies heavily on the use of digital photographs:

Digital photographs are some of the most critical pieces of metadata obtained during a site visit. During each pass, the technician takes an average of 14 standard digital photos of each Oklahoma Mesonet station to document the condition of the site and its surroundings. Field personnel photograph the soil temperature plots, soil moisture plots, soil heat flux plots, net radiometer footprint, full 10 m × 10 m site enclosure, and surrounding vegetation. The vegetation conditions are photographed both upon the technician’s arrival and departure. Vegetation-height gauges, with alternating stripes every 10 cm, appear in appropriate photographs…Each year, the Mesonet archives more than 4000 digital pictures to aid in the documentation of station histories.

In addition to a digital camera, other digital tools that may help you when servicing and maintaining your mesonet stations include the following:

- Digital voltmeters can be used to measure resistance, current, and voltage for field and lab validation of sensors, data loggers, measurement and control peripherals, and power supplies.

- Oscilloscopes can provide validation of digital signals from sensor data lines, communication devices, and control peripherals.

- Environmental chambers are typically used under computer control to calibrate devices such as temperature and humidity sensors.

- Service monitor devices can be used to check the output (for example, frequency stability, deviation, and power output) from radio frequency devices such as VHF and UHF radios.

- Computing devices (such as personal computers, handheld mobile devices, and smartphones) can be used to run software that communicates with instruments, data loggers, and radio communication devices (such as cellular modems, programmable VHF and UHF radios, and data modems).

Campbell Scientific, Inc., "Weather Station Siting and Installation Tools" application note (1997). https://s.campbellsci.com/documents/us/technical-papers/siting.pdf.

Christopher A. Fiebrich, David L. Grimsley, Renee A. McPherson, Kris A. Kesler, and Gavin R. Essenberg, “The Value of Routine Site Visits in Managing and Maintaining Quality Data from the Oklahoma Mesonet,” Journal of Atmospheric and Oceanic Technology 23, no. 3 (2006): 406-416. http://journals.ametsoc.org/doi/pdf/10.1175/JTECH1852.1.

Robert A. Baxter, "A Simple Step by Step Method for the Alignment of Wind Sensors to True North" (Earth System Research Laboratory, 2001).

Procedures for Handling Non-Routine Maintenance Issues

Not only is it important to have procedures for your routine maintenance tasks, but having a standard set of procedures in place for handling non-routine maintenance issues can help you consistently address the issues that arise—including emergency situations. For example, before sending a technician out to a problematic site, consider whether the issue can be investigated and resolved from the central mesonet office. If a technician does need to visit a site, the technician should have a troubleshooting checklist to follow with the most common troublesome issues listed first. At the site, the technician should complete a form (whether hard copy or digital) that documents the problem, probable cause, and resolution.

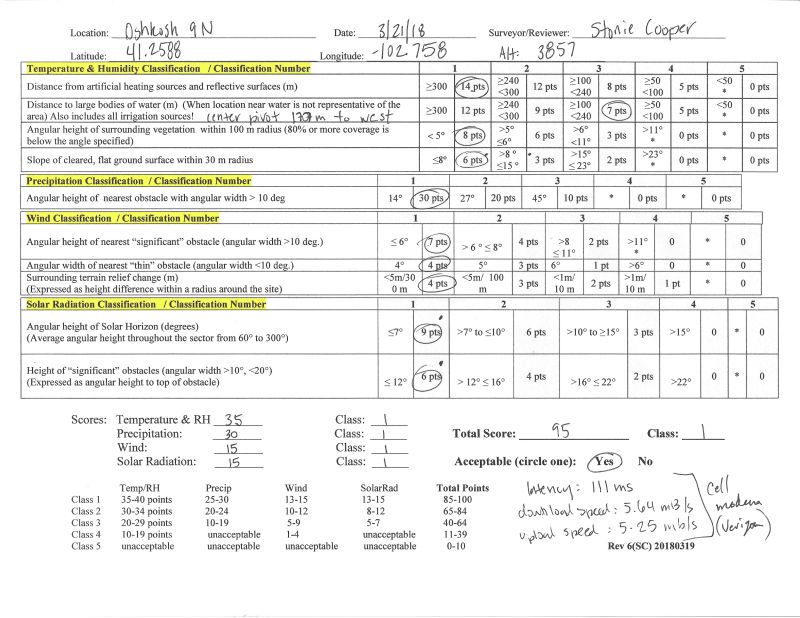

The following are recent site survey examples from spring field work at the Nebraska Mesonet. Site images taken at the same time are included as a part of their metadata.

(Image courtesy of Stonie Cooper, Mesonet Manager, Nebraska Mesonet)

In addition, consider what is an acceptable response time to have a non-routine maintenance issue resolved, as well as the tolerance level of your stakeholders for bad or missing data in the data products you provide them with.

The Kentucky Mesonet uses this procedure for documenting and resolving a maintenance issue:

Upon recognition of a problem, the quality assurance specialist issues a trouble ticket describing the situation. The ticket notifies the technicians of the problem and provides a deadline for the fix. When the technicians have finished the repair, the ticket is submitted back to the quality assurance specialist for approval and archival.
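The ticket lifecycle described above (issued, repaired, approved) can be modeled as a small state machine. This is only a sketch of the workflow as described; it is not the Kentucky Mesonet's software, and the class, field, and status names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class Status(Enum):
    OPEN = "open"          # issued by the quality assurance specialist
    REPAIRED = "repaired"  # submitted back by the technicians
    APPROVED = "approved"  # approved and archived


@dataclass
class TroubleTicket:
    station: str
    description: str
    deadline: date
    status: Status = Status.OPEN
    resolution: str = ""

    def submit_repair(self, resolution: str) -> None:
        """Technician reports the fix; only valid for an open ticket."""
        if self.status is not Status.OPEN:
            raise ValueError("only an open ticket can be submitted as repaired")
        self.resolution = resolution
        self.status = Status.REPAIRED

    def approve(self) -> None:
        """Quality assurance specialist approves a repaired ticket."""
        if self.status is not Status.REPAIRED:
            raise ValueError("only a repaired ticket can be approved")
        self.status = Status.APPROVED

    def is_overdue(self, today: date) -> bool:
        """True if the ticket is still open past its deadline."""
        return self.status is Status.OPEN and today > self.deadline
```

Enforcing the order of the steps in code helps ensure that no repair is archived without review by the quality assurance specialist.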

“Active Trouble Tickets,” Iowa State University, accessed June 20, 2017, https://mesonet.agron.iastate.edu/QC/tickets.phtml.

Christopher A. Fiebrich, David L. Grimsley, Renee A. McPherson, Kris A. Kesler, and Gavin R. Essenberg, “The Value of Routine Site Visits in Managing and Maintaining Quality Data from the Oklahoma Mesonet,” Journal of Atmospheric and Oceanic Technology 23, no. 3 (2006): 406-416. http://journals.ametsoc.org/doi/pdf/10.1175/JTECH1852.1.

Mark A. Shafer, Christopher A. Fiebrich, Derek S. Arndt, Sherman E. Fredrickson, and Timothy W. Hughes, “Quality Assurance Procedures in the Oklahoma Mesonetwork,” Journal of Atmospheric and Oceanic Technology 17, no. 4 (2000): 474-494. http://cig.mesonet.org/staff/shafer/Mesonet_QA_final_fixed.pdf.

“Site Maintenance,” Kentucky Mesonet, accessed June 20, 2017, http://l.kymesonet.org/instrument.html#!sitemaint.